If you don't ask, you'll never know. While this adage applies to countless situations, it's perfectly suited to the exhibit environment. If you don't ask customers and prospects what they think - about everything from your products, to your brand, to your exhibit staffers - you'll never know. And if you don't query your customers at least once in a while, your program will be running blind, leaving you guessing about where you could have made improvements to obtain greater success, or worse yet, where you went horribly wrong.

Given the importance of attendee surveys, you can't just slap one together on a whim. Attendees spend precious little time in your booth to begin with, and getting them to stop and talk to you long enough to complete an in-booth survey is a challenge in itself. So when you snare a willing survey participant, it's not only critical that the survey provides the accurate, relevant data you need, but that it's easy enough to complete that attendees won't bail out before finishing it.

If you want a highly statistical survey that's dead-on accurate, there are many variables you'll need to consider. In this case, survey writing should probably be left to the professionals your first time out of the gate. However, if you're looking for general feedback with minimal room for error and minimal costs to you, you can still write your own survey if you follow a few simple recommendations and avoid a few careless mistakes.

Here are 10 of the most important - and most often violated - survey-writing guidelines. Heed them, and you'll create an exhibit survey that'll put your finger on the pulse of your customers. Ignore them, and you'll likely bypass countless opportunities to improve your survey - and maybe even your exhibit program.

Limit the scope of the survey.

Before writing the survey, figure out exactly what you need to learn from it. Then rate the information as "need to know" and "nice to know." My experience in survey development has shown that surveys with between six and eight questions get the highest response rates from attendees. So narrow your "need to know" items to eight items or less, and if you don't have six to eight "need to know" questions, toss in the most important "nice to know" questions to fill out the survey.

Use brief, tightly focused questions.

Formulate the survey questions with plain, direct language to allow respondents to answer quickly and accurately. Clarify any terms that might be open to misinterpretation, and avoid using acronyms when possible.

Let's say you ask this question: "How many times have you seen or talked to your doctor about your health in the past two weeks?" Before answering your question, participants are likely to ask themselves questions such as: "Does 'doctor' mean my ophthalmologist or psychiatrist as well? If I talked about a broken bone, does that qualify as talking about my health? If I talked to a nurse and not a doctor, does that count?" A more direct question might be: "How many times in the past two weeks have you spoken directly to your primary physician about your asthma?"

Include simple instructions.

You'd be surprised how often people forget to include completion instructions on their complex surveys. When necessary, include instructions on how a question is to be answered, such as the number of allowable answers, or explanations about scales or rankings. Also, if you don't need to tie responses to individuals, informing attendees of the anonymity of responses can often improve the number and validity of responses you capture.

Provide space for additional explanation.

Most attendees are pressed for time, so a fill-in-the-blank survey is usually out of the question. However, a few attendees might be willing to tell you even more if you give them the opportunity.

Consider leaving a bit of room at the end of the survey for additional explanation, rather than after each question, as attendees might bog down trying to add more information to one answer and then abandon the survey altogether. Use the explanation space to pose a question about a general topic or allow attendees to comment about the survey or a particular topic of their choosing.

Ask for demographic data at the end.

Research shows that respondents are more likely to complete surveys fully if the survey starts with pertinent, opinion-related questions rather than mind-numbing demographic data. So if possible, eliminate demographic questions by linking information from attendees' trade show badges to the survey either electronically or by simply swiping badges with a card reader, printing out the data, and stapling it to the written survey.

If you must ask a host of demographic questions, put them at the end of the survey. And if you need a few demographic questions up front to tailor the survey and/or eliminate questions for particular respondents, limit the number of questions to a bare minimum.

Pilot test your survey before using it.

You test drive everything from cars, to cell phones, to shoes, to televisions. So why not your exhibit survey? Give it a good test run to work out the kinks - and maybe even spot a typo or two - before you turn it loose on survey respondents.

Test the survey on a focus group, or ask some of your best customers to take the survey online and provide feedback about everything from content to format. Customers will not only provide the information requested in the survey, they'll feel like VIPs whom you turn to for advice. If focus groups or customer test surveys are out of the question, ask a co-worker to review your survey, as feedback from even one person can often help you clarify or improve questions - and avoid embarrassing typos.

Consider the order of the questions.

Be aware that the order of your survey questions can affect the way attendees interpret and respond to them. Let's say you ask question one: "Do you support health-care companies offering gifts or perks to physicians who visit their booths?" and then, you ask: "Do you feel that Food and Drug Administration regulations limiting promotional-campaign spending are justified?" Attendees are likely to assume that question No. 2 pertains to exhibits, but you might be asking about promotional campaigns in general. If you'd asked the questions in the reverse order, participants would likely define a "promotional campaign" as any kind of promotion, not just exhibit promotions.

Monitor the length and complexity of questions.

The longer and more complex the question, the less likely attendees will answer it completely and accurately. Thus, some experts suggest keeping each survey question to roughly 20 words or less.

Psychology studies show that the human brain has a hard time simultaneously tracking any more than seven ideas or concepts. So also limit any multiple-choice answers to seven options or less, and keep each option to roughly the same length and complexity to provide an apples-to-apples comparison.
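If you keep your draft questions in a spreadsheet or script, a quick automated check can flag the ones that run long. The following is only a rough sketch in Python, assuming the 20-word and seven-option guidelines above; the function name `check_question` and the two constants are hypothetical and not part of any survey tool.

```python
# Rough guidelines taken from the text above; adjust to suit your own survey.
MAX_QUESTION_WORDS = 20
MAX_OPTIONS = 7


def check_question(text, options=()):
    """Return a list of warnings for one survey question (empty if none)."""
    warnings = []

    # Count words by splitting on whitespace; punctuation stays attached to words.
    word_count = len(text.split())
    if word_count > MAX_QUESTION_WORDS:
        warnings.append(
            f"question has {word_count} words (guideline: {MAX_QUESTION_WORDS})"
        )

    # Flag multiple-choice questions that offer too many answers.
    if len(options) > MAX_OPTIONS:
        warnings.append(
            f"question has {len(options)} options (guideline: {MAX_OPTIONS})"
        )

    return warnings


# Example: a short question with four options produces no warnings.
short_question = "How often do you empty your vacuum bag or dirt compartment?"
short_warnings = check_question(short_question, ["a", "b", "c", "d"])
```

A word count won't tell you whether a question is clear, so treat any warning as a prompt to reread the question rather than as a verdict.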

Consider offering an incentive.

Convincing attendees to take time out of their busy day to complete your survey is always a challenge. However, a simple inexpensive incentive, such as a T-shirt, $5 gift card, or even a five-minute massage, can significantly increase your number of survey participants. Plus, such an incentive can improve the quality of attendees' answers, as they often feel they're making a fair exchange of quality information for the incentive.

Avoid common question-writing mistakes.

While the previous suggestions provide overall survey guidelines, here are a few formatting and phrasing problems to avoid.

Double-Barreled Questions. To eliminate confusion, avoid questions that ask for opinions about two different things, such as: "Do you believe that the ABC widget is safe and cost effective?" The issues of safety and cost are two different things, requiring two different questions.

Biased Questions. Make sure that the wording of the question doesn't lead the respondent to a particular conclusion. Don't ask questions such as: "Given the failure of national health-care reform, do you feel Congress should stop pursuing this matter?" The question asserts that reform has been a failure, so few respondents will support continuing something that's already deemed a flop.

Double Negatives. To avoid confusing attendees, don't ask respondents if they are in agreement with a statement that contains the word "not." Avoid questions such as: "Do you agree or disagree that the new Turbo Booster is not cost efficient?" Rather, ask: "Is the new Turbo Booster cost efficient?"

Loaded Questions. Avoid questions that only present one side of an issue, such as: "Do you support eliminating free animal vaccinations in our city to help balance our budget?" This doesn't give respondents an opportunity to state their opinions. A better solution would be: "Some people want to eliminate free animal vaccinations to help balance our city budget. Others suggest reducing spending through other means. What do you think?"

Determiners. Avoid using terms such as always, never, and only. In this question, "Do you always take your medication at the required scheduled times?" the "always" means a patient who missed only one dose would have to answer "no."

Overlapping Responses. Make sure multiple-choice answers are mutually exclusive. For example, the following responses overlap: a) one hour or less, b) one to three hours, c) three or more hours. If your answer is one hour, both "a" and "b" are correct, and if your answer is three hours, both "b" and "c" are correct.
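For numeric answer ranges, the overlap problem is easy to check mechanically. Here is a minimal sketch in Python, assuming each choice is written as a label with inclusive low and high bounds in minutes; the function name `find_overlaps` and the sample data are hypothetical illustrations, not part of any survey package.

```python
from itertools import combinations
import math


def find_overlaps(choices):
    """Return pairs of labels whose inclusive ranges share at least one value.

    Each choice is a (label, low, high) tuple; use math.inf for an
    open-ended upper bound such as "three or more hours".
    """
    overlaps = []
    # Compare every pair of choices, not just neighbors.
    for (label_a, low_a, high_a), (label_b, low_b, high_b) in combinations(choices, 2):
        # Two inclusive ranges overlap when each starts before the other ends.
        if low_a <= high_b and low_b <= high_a:
            overlaps.append((label_a, label_b))
    return overlaps


# The overlapping example from the text, expressed in minutes.
overlapping = [("a", 0, 60), ("b", 60, 180), ("c", 180, math.inf)]

# One possible fix: less than one hour, one to three hours, more than three hours.
exclusive = [("a", 0, 59), ("b", 60, 180), ("c", 181, math.inf)]

overlapping_pairs = find_overlaps(overlapping)  # [("a", "b"), ("b", "c")]
exclusive_pairs = find_overlaps(exclusive)      # []
```

The check only covers numeric ranges. Overlaps between worded answers still need a human reviewer, which is one more reason to pilot test the survey.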

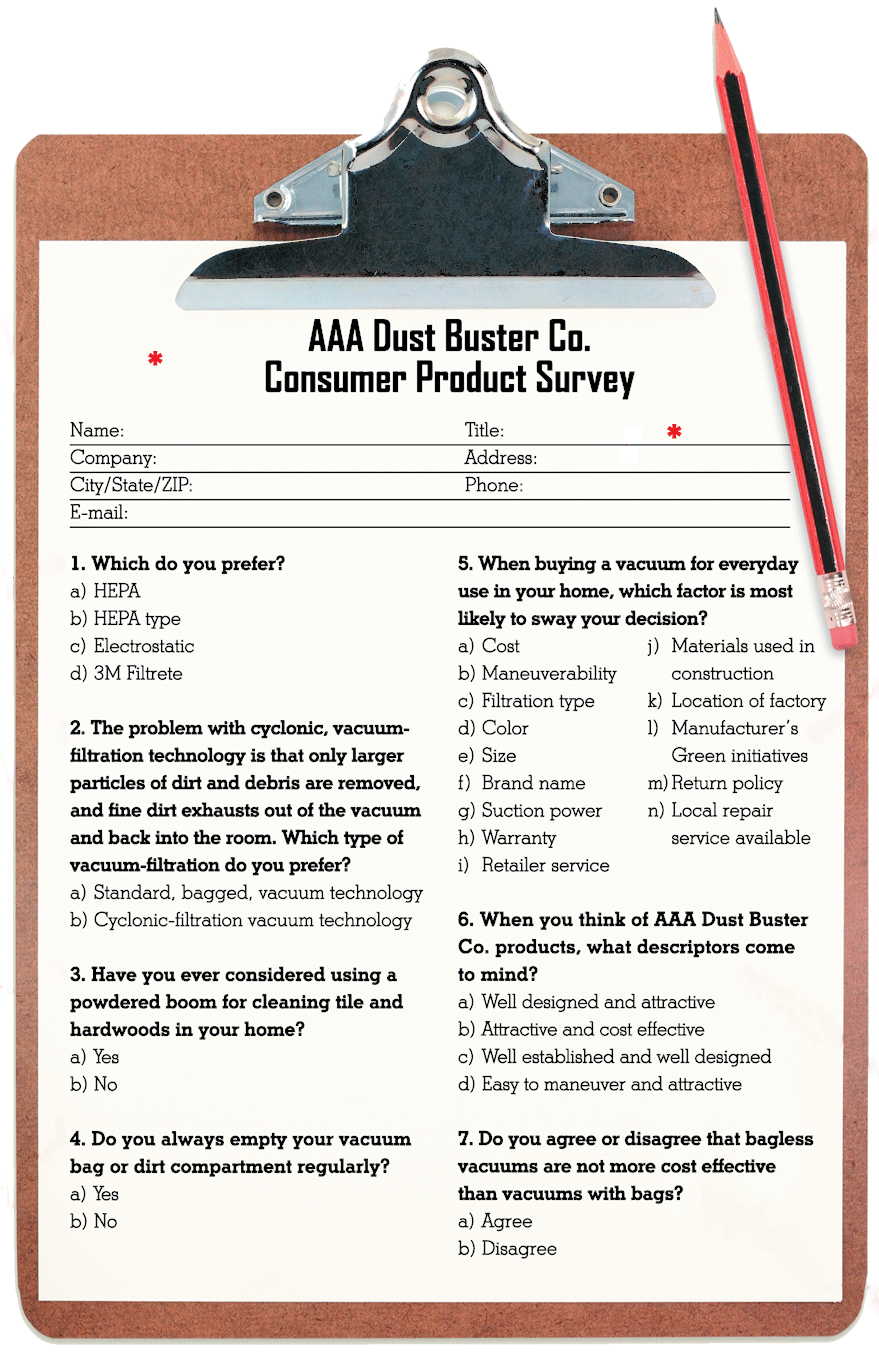

The following sample illustrates the do's and don'ts of survey writing. After reviewing it, look for ways to apply the tips to your own survey.


Sample Survey

The key to a successful survey is knowing what to ask and how to phrase it. Check out what not to do, and why, in the following example.

* This survey is void of instructions of any kind. Should participants pick just one answer, or can they select multiple answers? How should they indicate their responses, and is there additional room for comments at the end? Include simple, direct instructions at the beginning of the survey and/or provide any answer variations with respective questions.

1. If you're polling average consumers, many will not understand that these are different types of vacuum-technology options. Plus, respondents may prefer different filtration options in different circumstances, and no specific circumstance is indicated. Rather, ask: "Which vacuum-technology option do you prefer for everyday home use?" Then briefly explain each filtration type.

2. This question is biased. Since cyclonic technology appears to have a problem, most participants will select any answer except cyclonic filtration.

3. If the author of this survey had asked someone to preview the survey before using it, he or she likely would have caught the typo and changed "powdered boom" to "power broom."

4. Since "always" is part of the question, if the respondent completed this task 99.9 percent of the time, the correct answer would still be "no." A better option would be to ask: "How often do you empty your vacuum bag or dirt compartment?" Then provide options such as: a) once a month, b) twice a year, c) once a year, d) almost never, I wait until the vacuum loses suction.

* Survey participants want to "get in and get out" of the survey as fast as possible, which means they don't want to get bogged down in demographic information right up front. If you must include demographic information on the survey, ask for it at the end, or better yet, link the survey to an electronic badge-swiping technology of some sort.

5. While these options are fairly simple to understand, most people will need to read the list of 14 items several times to answer the question, thereby increasing the chances that they'll make a rash decision to speed completion or abandon the survey altogether. Try to stick to seven options or less to avoid these issues. Since decisions are rarely made on one factor alone, you may even consider having respondents rank their top three factors so you understand which elements are considered and to what level of importance.

6. This question contains overlapping responses that will confuse the participant. Plus, pairing two different concepts into the same answer can also confuse the reader and increase the chances of obtaining inaccurate survey responses.

7. This double-negative question confuses respondents. Change the question to something such as: "Do you believe bagless vacuums are more cost effective? a) Yes or b) No."


Jeff Kidd is the vice president of Atlanta-based BrightPipe,
